In the past 4 years, I had the privilege of working on DreamWorks Animation's brand new path tracer MoonRay (Lee 17), helping transition the studio's rendering workflow from rasterization / point-based global illumination to ray tracing. We delivered the first MoonRay full-length feature film, How To Train Your Dragon: The Hidden World, at the end of 2018 and presented it to the world in 2019. Since then, I have joined Walt Disney Animation Studios, working on its in-house renderer Hyperion (Eisenacher13). My first credit at WDAS is Frozen 2, and it will be in theaters on 11/22/2019. Helping two extremely beloved IPs in the way I most wish to contribute feels surreal every time I step back a bit and look at it. The first How To Train Your Dragon is easily one of my all-time top 5 animations, and I still get goosebumps whenever I watch it. I also vividly remember the day Frozen was released during the 2013 Thanksgiving holiday: I went to a Burbank theater with a grad school classmate to support friends who worked on the title. As the song dropped, that "classic 90s Disney is back!" thought kept lingering until the end credits, and we had no idea how crazily (well, maybe some idea...but definitely nowhere close to what really happened later...) the Frozen phenomenon would sweep the whole world in the next few years. As a rendering fan boy, shipping these two films with two renderers I took part in at two all-star studios is a definite dream-come-true moment for me. I am truly grateful for the opportunities!

MoonRay team with How To Train Your Dragon Director Dean DeBlois

As a rendering software developer, my main contributions to MoonRay span geometry and volume. On the geometry side: intersection kernels for curves/points/quadrics/instancing, rotation/deformation motion blur, a tessellator for subdivision and polygon meshes, a geometry shader API separating geometry generation from the renderer's internal representation (shader writers can develop geometry shaders without touching the renderer's internal structures), a Swiss-army-knife-like Alembic geometry importer (built as a demonstration of the geometry shader API, it turned out to become the major geometry workhorse for movie production), a primitive variable system (FX artists love to use it to pump all sorts of arbitrary data into the shader graph), a renderer scene graph for BVH construction/manipulation, and an artistic control feature that lighters demanded: shadow linking. On the volume side: a volume integrator, (multiple) importance sampling for scattering/emission effects, multiple scattering, a volume AOV system based on light path expression syntax, and a random walk subsurface scattering skin shader.

Production's first play of emissive volume: "it's expensive to converge...."

Emissive volume + thin fog: my inner voice screamed in pain when I watched the storyboard screening

The dust in the air has a term: CIA (craps in the air)

A mixture of particle and instancing (Brian: "do they simply want to break the renderer?")

Lightning in the gloomy cloud, because why not!?

"How many levels of instancing prim vars you need?" "Maybe 4? oops can you make it 8? We just passed that limit"

The earliest in-production sequence for testing multiple-scattering cloudscapes

You hear that, Mr. Anderson? That is the sound of a water splash with a thousand bounces

Thick...white...emissive mist wall...purely brutal

A highly art-directed shot where NUKE comes to the rescue

Thin fog is actually surprisingly tricky for free path sampling
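A rough way to see why thin fog is tricky: with plain free path sampling, almost every sampled distance lands beyond the fog, so very few paths ever scatter inside it. The sketch below assumes a homogeneous medium with a made-up extinction coefficient `sigma_t` and slab depth; the function names are hypothetical and this is not how MoonRay implements it.

```python
import math
import random

def sample_free_path(sigma_t, rng):
    """Inverse-CDF sample of p(t) = sigma_t * exp(-sigma_t * t) (homogeneous medium)."""
    # 1 - u lies in (0, 1], so the logarithm is always defined.
    return -math.log(1.0 - rng.random()) / sigma_t

def scatter_probability(sigma_t, depth):
    """Analytic chance that a free path ends inside a slab of the given depth."""
    return 1.0 - math.exp(-sigma_t * depth)

def scatter_fraction(sigma_t, depth, n, seed=1):
    """Fraction of n sampled free paths that actually scatter inside the slab."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n) if sample_free_path(sigma_t, rng) < depth)
    return hits / n

if __name__ == "__main__":
    # Hypothetical thin fog: optical depth sigma_t * depth = 0.1.
    sigma_t, depth = 0.01, 10.0
    print("analytic :", scatter_probability(sigma_t, depth))
    print("measured :", scatter_fraction(sigma_t, depth, 100_000))
```

With an optical depth of 0.1, fewer than one in ten samples contributes any in-scattering, which is why the fog itself converges slowly unless the sampling is adapted to it.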

The rendering field is not that big. When your tech lead has won an Oscar and has been around the field for decades, there are quite a few chances to meet celebrities in the field. ("Gonna head to the restaurant! Iliyan will join us for lunch" "Uh....you mean that VCM Iliyan!?") As a shameless fan boy (the kind of fan boy that will ask for a selfie or an autograph), it feels really cool to chat with rock stars, asking questions that puzzled me while reading their papers, or about the limitations I hit when trying the algorithms in real-life software. At the same time, when Alice Cooper says "Hang out with us!", it's probably still a natural reaction for Wayne to feel this way.

I can't help but constantly think I am underqualified for this cool gig. There was one time I pair programmed with Thomas Müller, and it just kept me thinking: "wow, you could pay 10 times my salary to hire this guy and it would be well worth the price!" Another time I asked Yonghao Yue about his spatial acceleration work for free-flight sampling, and Tzu-Mao Li and Yuanming Hu happened to join the chat. After a few minutes I really felt I couldn't keep up with the conversation of three geniuses... It sounds kind of silly, but finding my reason for existing in this field is a thought that pops up every now and then. My current answer to myself: there are still unsolved problems for talented researchers to solve, and there is inevitably not-that-cool but important work needed for a production renderer to function. Before the holy grail shows up, someone needs to understand users' problems and come up with solutions/workarounds for them under a reasonable budget and time frame. That's my job and my reason for existing here.

"Any question that I may help to answer?" - Marcos Fajardo

"Yes. Can I have a picture with Mr. Arnold? :)"

For studios like WDAS and DWA that make cartoons, the mindset of artists using renderers is still quite painterly. Striking a balance between flexibility, ease of use, performance and code complexity is a surprisingly involved, ongoing daily negotiation/communication process. Some of my DWA colleagues volunteered to light a few production shots to better understand why users request certain features. There are also times when WDAS lighters demonstrate their lighting setups and break down their compositing Nuke graphs, so the developers know what can and cannot be done in the render. I found this back-and-forth communication extremely valuable for feature evaluation and prioritization. There are also some really good books that talk about digital lighting and rendering from the artist's standpoint. I particularly like "Lighting for Animation: The Art of Visual Storytelling", since it also covers how artists address notes, includes interviews revealing that different artists can approach the same problem in different styles, and discusses what artists want from the next generation of renderers (interesting but not that surprising: most artists in the interviews want real-time interactivity, and care much less about physics/math correctness).

And then there are those moments, in the chats while sitting with artists waiting for the scene to load and the first pixel to show up, or while gossiping with developers from other studios at conferences, that really struck me as a surprise (well, maybe not that surprising once I heard another person say the same thing again): "Hey, that's not what those marketing guys said!" I feel I can write them down since they are both informative and amusing.

"I am going to bias every pixel I render! why you tell me I should use an unbiased algorithm?" "well you can easily measure the error with ground truth...oh wait you probably don't care about that..." plus, what renderer is actually truely unbiased? you specify ray depth...bias! you use firefly clamping...bias! you use a denoiser...bias! Any photon mapping family integrator...bias! any sort of culling...bias! bias! bias! My feeling is: what artists want is predictable (consistent) result, unbiased...not that much. MoonRay once wants to remove ray depth control and the VFX sup came yelling at us: "stop! don't steal my render time controller!" "even that means energy loss?" "of course! it's far better than fireflies!"

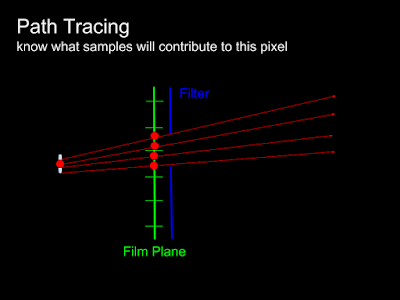

"Will my ultimate one for all integrator ever come?" At the first wave of path tracing for film industry revolution there still seems to be quite some interesting variations between different renderers. We saw advanced integrators like SPPM, MLT, VCM, BDPT and even UPBP on Renderman and Manuka, and nowadays the heat of integrator arm racing in industry seems cool down quite a bit. Yes the statement of "the complexity of production renderer makes maintaining advanced integrator techniques impractical" sounds like sour loser playing guitar:"that guy only has technique, but his song has no soul", but I guess once signed up for the production rendering gig, I am already classified as sold out pop rock musician (shower though: WETA is probably the Dream Theater in this case). When you have multiple integrators in the renderer and you use the path tracer for 95% of time, chances are the time you need the advanced secret weapon that thing doesn't always stay consistent with path tracer (especially when you have so many artistic control knobs (hacks) on path tracer that may not implemented in other integrators) Path guiding became potential "path tracing killer" in the past few year, but I think we are still not there yet.

RenderMan removed UPBP support in the recent 22.0 release

"Can't you just do this in comp?" "If I can do that in render I prefer getting it done in one pass" My fragile ego likes to hear artists prefer rendering solution instead of comp solution, but every time I got request like "I only want to change this part but left all the other stuff untouched" I tend to dodge it to NUKE (in DWA we even have the fathers of NUKE Jonathan Egstad and Bill Spitzak for this part of expertise). The last minute adjustment is super powerful weapon for artist and so far NUKE seems to be the best one providing the flexibility. Our MoonRay tech lead Brian Green once joked about: "we should aim for making the fastest aov renderer that can handle thousands of aovs if I knew how heavy artists are relying on NUKE" Recently renderer like Corona adds on more and more image processing functionality in renderer, maybe that can be a good alternative of putting all the functionality in 3D rendering land. So far on this kind of feature requests negotiation, I keep stepping back: "no I'm not going to put this witchcraft in our code!" "well if that doesn't make the code ugly maybe?..." "Fine...I'll do that if it doesn't slow the renderer down (too much)..."

"People will get sick of this physically based rendering look in the next few years, and people will start advocating stylized rendering...like what we did in the last renderer..." "Meh...stop making excuse because we are incapable of doing the right thing!" And then Spider-Man: Into the Spider-Verse came, snatched the Oscar. While in theater, I felt I witnessed a part of history, and feel bad to the colleague made that prophecy :P I am always not the bsdf guy in the team so I feel I can't make that much comment here. Personal take: For look development, physically based shading provides predictable/consistent render across different lighting scenario. For lighting, you still want to have the per shot, per light adjustable backdoor to make director happy. Keep bsdf consistent. Keep light flexible.

"Uhh...how long does it take for this shot to load up so I can debug this ticket?" "You know what Wayne? I think all you rendering developers should at least once have this kind of taste to understand how miserable our life is! In some crazy shots it took more than an hour for us to see the first pixel!" When Max Liani presented XPU in SIGGRAPH2018 he pointed out: we can shoot out more and more rays when hardware advances, but the increasing loading time keep taking over all the time saved from ray tracing. For artistic iteration, we hope at least we only need to load the shot once and keep modifying it without relaunching the renderer. Depends on how much the renderer relying on precompute cache and the quality of BVH, this is not always possible though. There will never be fast enough renderer for artists. You give painters unlimited time, they'll change the painting everyday since they never feel it's good enough. For farm render, if artist need to go home and check the render tomorrow, whether it's 10 hours or 5 hours doesn't matter that much, only when you can get the time below 3 hours (Blizzard's Redshift workflow) so artists can have two dailies in an day that will change the workflow.

With all these unsolved problems still on the road, I feel extremely lucky to be able to stand in the front row watching this ongoing rendering revolution. The TOG papers on Sony Arnold, Solid Angle Arnold, WETA Manuka, Disney Hyperion and Pixar RenderMan reveal so much information that was once not easily accessible, and people in the community are so open with each other nowadays. It is a wonderful time to work on rendering :) Thanks to all the friends and comrades at work (I am too shy to say this at work, but Joe, Karl and Mark, I am your big fan!). I hope I can keep helping artists make beautiful/inspiring movies to entertain you, and add some more content to this ghost town.

Thanks Shenyao for supporting the movie on day 1 :)